Reading large CSV files with CFML

As its name suggests, the Spreadsheet CFML library is focused on working with spreadsheets, in either binary or XML format.

But there's a third format which is often used for the same kind of data: CSV.

For a while now, the library has provided a few convenience methods for working with CSV which allow conversion to and from spreadsheets and CFML queries.

I'll admit though that these methods don't perform very well when dealing with large CSV files. For various reasons, the reliance on CFML query objects means that large amounts of CSV require large amounts of memory.

To illustrate, let's fire up a Lucee 6 instance with just 128MB of memory assigned to the heap. We'll first use the library's csvToQuery() method to read a reasonably sized CSV file with 100,000 rows and 15 columns of auto-generated test data, weighing in at just under 14MB.

<cfscript>

spreadsheet = New spreadsheet.Spreadsheet();

path = "c:/temp/normal.csv";

result = {};

startTime = GetTickCount();

data = spreadsheet.csvToQuery( filepath=path, firstRowIsHeader=true );

endTime = GetTickCount();

result.milliseconds = endTime-startTime;

result.recordcount = data.recordcount;

WriteDump( result );

</cfscript>This completes in a shade over 2 seconds, which is perfectly fine if you are only dealing with files of or below this size.

But what if you're tasked with processing significantly more data? Let's try the same code with large.csv, a half-gigabyte file containing 1 million rows each with 50 columns.

No joy.

ReadCsv()

CFML expert Brad Wood was recently among those pointing out this shortcoming, and with his help I've come up with a new method focused on reading large CSV files as efficiently as possible: readCsv().

It achieves this by dispensing with CFML query objects as a data store and by working more directly with the underlying Apache Commons CSV java library so that the file is read line by line rather than being pre-loaded into memory.

Not only does csvToQuery() read the whole file up front, it also then builds an array from the parsed CSV records and uses that to generate and return a separate query object - all of which adds up to a substantial memory requirement.

By contrast readCsv() only builds an array and, as we'll see later, even that is optional.

Let's give it a spin with large.csv.

<cfscript>

spreadsheet = New spreadsheet.Spreadsheet();

path = "c:/temp/large.csv";

result = {};

startTime = GetTickCount();

parsed = spreadsheet.readCsv( path )

.withFirstRowIsHeader()

.intoAnArray()

.execute();

endTime = GetTickCount();

result.milliseconds = endTime-startTime;

result.recordcount = parsed.data.Len();

WriteDump( result );

</cfscript>And when we run it we get...

Ah... While less memory is being used now, returning an array of 1 million records inevitably requires a certain amount of available heap and we still don't have enough.

Record-wise approach

This is where a brilliant suggestion from Brad comes into play: parse the CSV file, but don't return anything. Wait, what? How can my CFML application process the data if nothing is returned?

The answer is: by passing in a User Defined Function (UDF) as a closure which will process each row of the data as it's being parsed rather than waiting for the entire set to be loaded into memory.

To simulate some record-wise data processing let's write a short UDF which simply adds the number of values in each row to a running tally and tracks the number of the last row to be processed. In a real-world scenario we might be importing the rows into a database or other such transfer or transformation.

<cfscript>

spreadsheet = New spreadsheet.Spreadsheet();

path = "c:/temp/large.csv";

result = {};

// start a tally

result.totalValuesProcessed = 0;

// track the last row processed

result.lastRowProcessed = 0;

// define a UDF to process each row

rowProcessor = function( values, rowNumber ){

result.totalValuesProcessed = ( result.totalValuesProcessed + ArrayLen( values ) );

result.lastRowProcessed = rowNumber;

}

startTime = GetTickCount();

spreadsheet.readCsv( path )

.withFirstRowIsHeader()

.withRowProcessor( rowProcessor )

.execute();

endTime = GetTickCount();

result.milliseconds = endTime-startTime;

WriteDump( result );

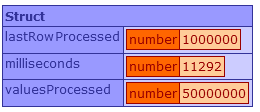

</cfscript>The UDF takes the array of values parsed from the current row as its first argument and the number of that row as its second. It uses those arguments to update the tracking variables in the result. We then store it in the rowProcessor variable and pass it into the withRowProcessor() method. This time execution results in:

Success! It may have taken over 11 seconds, but even with this memory-starved instance we were able to process 1 million rows of CSV data and 50 million values.

Thanks again to Brad for this and other suggested improvements to CSV handling about which you can find out more in the documentation.